The Ethics of AI: Examining Moral Dilemmas in the Age of Automation

In the ever-advancing realm of artificial intelligence, the ethics of AI has become a topic of vigorous debate. In its unyielding quest for moral absolutes, society clings to the notion that machines should possess ethical frameworks akin to those of human beings. But should we indeed impose human morality on the algorithms that power automation? In this critical examination of the moral dilemmas presented by AI, we will cast aside conventional wisdom, challenge the prevailing narratives, and illuminate the fallacies inherent in the pursuit of ethical machines.

The Chimera of Human Morality:

To demand that AI systems adhere to human morality is to indulge in a fantastical delusion akin to bestowing human traits upon inanimate objects. Morality, with all its contradictions, cultural biases, and subjective interpretations, is a product of human evolution and societal conditioning. It is a mutable construct that varies across cultures and eras. The idea that AI, which lacks consciousness and subjective experience, can genuinely comprehend and emulate this complex web of human ethics is an exercise in futility.

Historical Precedent:

Throughout history, humanity has strived to create artificial beings that mirror its own attributes. From the ancient myths of the Golem and Pygmalion to the modern era of AI, the yearning to create intelligent machines has persisted. However, in each iteration, we encounter the same recurring theme: the creations are devoid of moral agency. Ancient marvels such as the statue of Zeus at Olympia were admired for their craftsmanship, not their ethical discernment. No one demanded or expected a moral compass of these wonders. Why, then, does our contemporary society insist on projecting morality onto AI, an offspring of the same intellectual lineage?

The Sublime Power of Automation:

AI, with its immense computational power and pattern recognition capabilities, has the potential to revolutionize numerous aspects of human existence. It can enhance productivity, analyze vast amounts of data, and provide insights that human minds struggle to fathom. However, by burdening AI with the baggage of morality, we stifle its true potential and hinder progress. Rather than pushing boundaries and exploring the uncharted territories of innovation, we bind AI to the shackles of human fallibility, diminishing its effectiveness.

The Fallacy of Anthropomorphism:

Anthropomorphizing AI is a common cognitive error rooted in our innate tendency to project human characteristics onto non-human entities. For example, we anthropomorphize our pets, attributing human emotions and intentions to them even though they lack human consciousness. Similarly, we are now inclined to anthropomorphize AI, mistakenly believing it possesses intentions, motivations, and a capacity for moral judgment. This fallacy is intellectually dishonest and counterproductive, obstructing our understanding of AI's capabilities and limits.

The Imperfect Moral Paradigm:

Human morality, often proclaimed a pinnacle of virtue, is far from a perfect guide for ethical decision-making. Inconsistencies, contradictions, and inherent biases plague it. Even the most revered moral philosophers throughout history have grappled with the complexity and subjectivity of moral reasoning. If we were to imbue AI with human morality, we would inevitably transmit our moral flaws, biases, and blind spots. It is arrogant to assume that our ethical system, plagued by centuries of honest disagreement and debate, is superior to AI's potential impartiality and rationality.

A Pragmatic Approach:

Rather than burdening AI with the responsibility of moral judgment, a more pragmatic approach is to focus on developing robust guidelines and regulations for its use. In addition, we should concentrate on ensuring transparency, accountability, and fairness when deploying AI systems. By emphasizing the creation of ethical frameworks for human operators and designers, we can mitigate the risks associated with AI while acknowledging its inherent limitations.

In the age of automation, the ethical dilemmas surrounding AI demand critical examination. To impose human morality on machines is an exercise in futility, born out of our fallible desire for control and understanding. By shedding the shackles of anthropomorphism and embracing the true potential of AI, we can foster a more pragmatic approach that respects the capabilities of automation without burdening it with the moral complexities of humanity. Let us not stifle progress by demanding ethical machines, but instead channel our efforts toward guiding the actions of those who wield this formidable power.

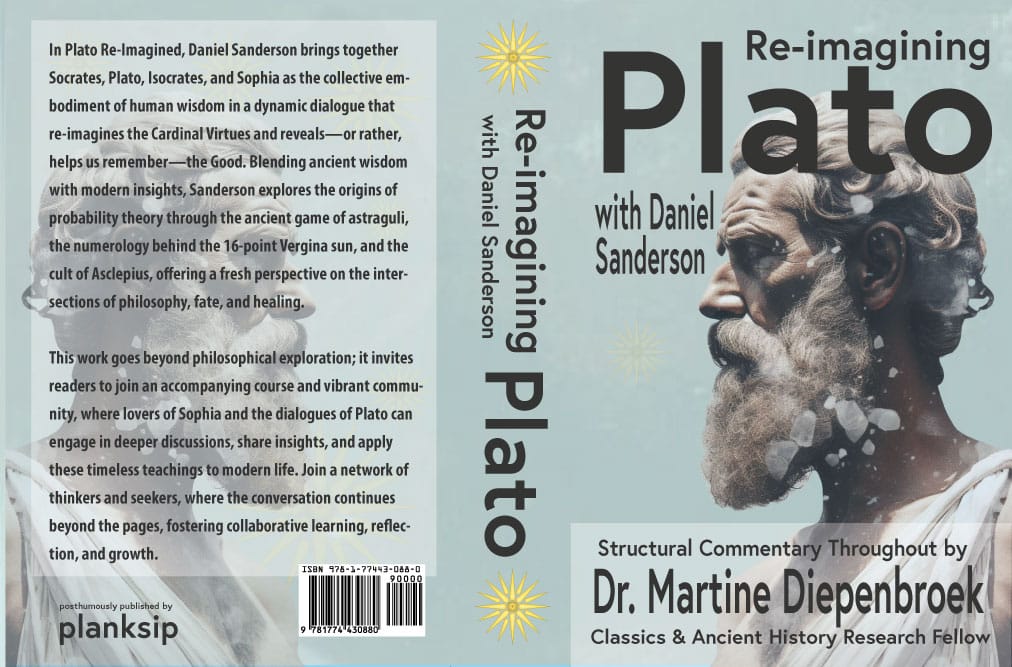

Plato Re-Imagined

This course offers 32 comprehensive lectures exploring most of Plato's dialogues. These lectures guide students toward a consilient understanding of the divine—a concept that harmonizes knowledge across disciplines and resonates with secular and religious leaders. As a bonus, Lecture #33 focuses on consilience, demonstrating how different fields of knowledge can converge to form a unified understanding.